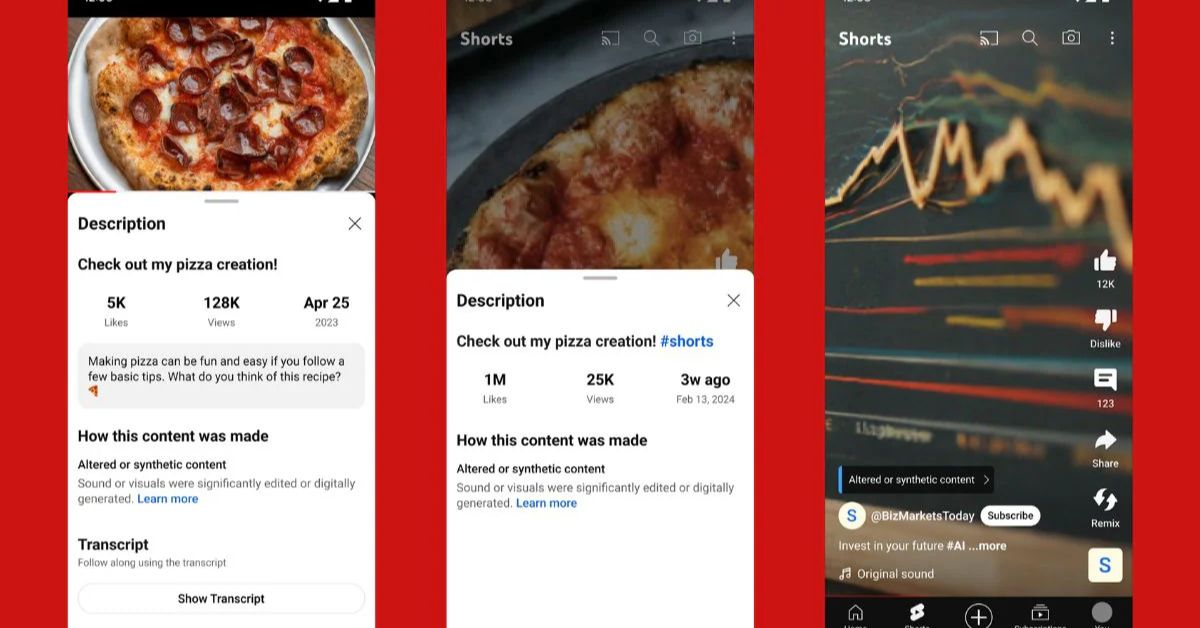

YouTube has announced that users will have to disclose whether the videos they upload contain synthetic or manipulated media, including content made with AI. Videos on "sensitive topics," such as health, news, elections, or finance, will also get a label on the video itself.

Starting Monday, the company said, it will prompt people uploading new videos to answer "Yes" or "No" to whether their videos contain altered content.

The prompt asks whether the content does any of the following: “Makes a real person appear to say or do something they didn’t say or do,” “Alters footage of a real event or place,” or “Generates a realistic-looking scene that didn’t actually occur.” When a user answers “Yes,” YouTube adds a label to the video description that reads “Altered or synthetic content.”

YouTube said the new feature would go live Monday. However, when NBC News tried to upload a new video after the announcement, the feature was not yet available.

The change comes as tech companies struggle to combat the growing problem of AI-generated misinformation online.

YouTube, which is owned by Google, already hosts hundreds of thousands of videos that are either entirely machine-generated or include AI elements. A January investigation by NBC News detailed hundreds of videos, uploaded since 2022, that used AI tools to spread fake news about Black celebrities.

Most of the videos used AI-generated audio narration, which can be done far more quickly and at a fraction of the price of hiring a human actor to read from a script.

The YouTube team also shared the news in a tweet:

we're rolling out a new tool in Studio for creators to disclose if their content is meaningfully altered or synthetic and seems realistic.

in order to strengthen trust & transparency on YouTube, we’re requiring creators to disclose this info when a viewer could easily mistake…

— TeamYouTube (@TeamYouTube) March 18, 2024

Not all of the examples NBC News found would have to be labeled as synthetic content under YouTube's new rules. For example, a voice-over created with AI text-to-speech technology does not have to be labeled unless the resulting video aims to mislead viewers with a “realistic” but fake voice impersonating a real person.

“We are not requiring creators to disclose content that is clearly unrealistic, animated, includes special effects, or has used generative AI for production assistance,” YouTube said in a post Monday. “We won’t require creators to disclose if the use case involves generative AI used for productivity, such as generating scripts, content ideas, or automatic captions.”

The company said the labels would roll out first on its mobile app “over the next several weeks,” followed by desktop and then YouTube TV. Without specifying a timeline, YouTube said it will in the future “penalize” those “who consistently choose not to disclose this information.” It also said it may add the label itself where it believes unlabeled content could “confuse or mislead people.”

The move comes even as YouTube has made, and then reversed, efforts to contain the wave of misleading AI-generated content already on its platform. Its parent company, Google, has meanwhile continued to forge ahead with consumer AI products, such as its AI image generator Gemini.

Gemini itself drew criticism for churning out misleading historical images that showed people of color in settings where they would not historically have appeared, such as in Nazi uniforms or in the U.S. Congress of the 1800s. In response, Google temporarily limited Gemini’s ability to create images of people.
